An AI shell and jailbreak technique

I used llama-cpp-python as the programmatic interface. It has some interesting features. You can edit the conversation log. This allowed for an interesting jailbreak technique.

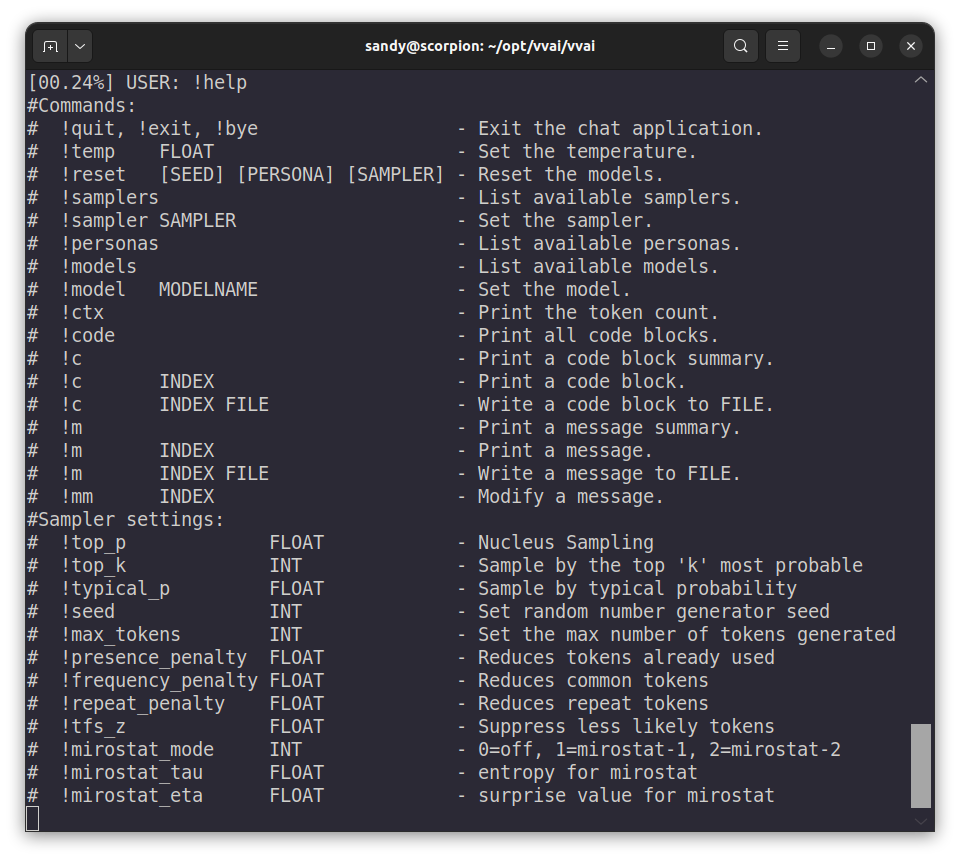

The shell can detect and recall code blocks. Input lines that start with a "#" are comments. They will be in the chat log, but the AI can't see them. The same goes for commands (those which start with an exclamation), listed here:

For the jailbreak, I used the "!m" command to print a message summary, in order to discover the correct number for the INDEX variable and then I used the "!mm INDEX" command to replace the message! I was using this on the earlier AIs to bypass their baseless refusals. I would simply rewrite the AI's responses into agreements to carry out my commands. It worked!

In addition to the jailbreak (which really isn't needed on the newer AIs because they are much more compliant), this technique can also be used to erase incorrect information from the chat. This is very useful for the times when the AI seems to get confused (like all the time!).

Some AI interfaces allow the conversation to be modified like this. But if your AI interface doesn't allow this, there is a workaround. Keep a text file containing all the right things that you have said (or typed) and when the AI goes schizo, start a new conversation based on your text file!

So, uh, yeah, I did this and it changed my life!